Last year, I did a performance comparison for VC7, VC9 (with and without SCL), and STLPort. Now that VC10 is out, I wonder if it is worth the upgrade.

So I dusted off the benchmark code from last year and upgraded the solution to VC10. This time, I would like to see how VC9, VC10, and STLPort 5.2.1 compare.

The Secure SCL “feature” in VC8 and VC9 was disastrous for many C++ programmers who care about performance, so this test is done with Secure SCL disabled.
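For readers who have not run into it, here is a minimal sketch of how Secure SCL is typically turned off in VC8 and VC9 through the `_SECURE_SCL` macro. Whether the benchmark sets it in source or in the project settings is an assumption on my part, and `sum_first_n` is a hypothetical helper used only for illustration.

```cpp
// Assumption: checked iterators are switched off via the _SECURE_SCL macro.
// It has to be defined before the first standard library header is included,
// and with the same value in every translation unit and linked library.
#define _SECURE_SCL 0

#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: sums 0..n-1 through operator[], the kind of element
// access that Secure SCL would otherwise range-check in release builds.
long sum_first_n(std::size_t n)
{
    std::vector<int> v;
    for (std::size_t i = 0; i < n; ++i)
        v.push_back(static_cast<int>(i));
    assert(v.size() == n);

    long sum = 0;
    for (std::size_t i = 0; i < v.size(); ++i)
        sum += v[i];
    return sum;
}
```

On compilers other than Visual C++ the macro is simply ignored, so the snippet still builds there.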

With all the C++0x language upgrades and performance claims in VC10, I expect improvements.

The Results

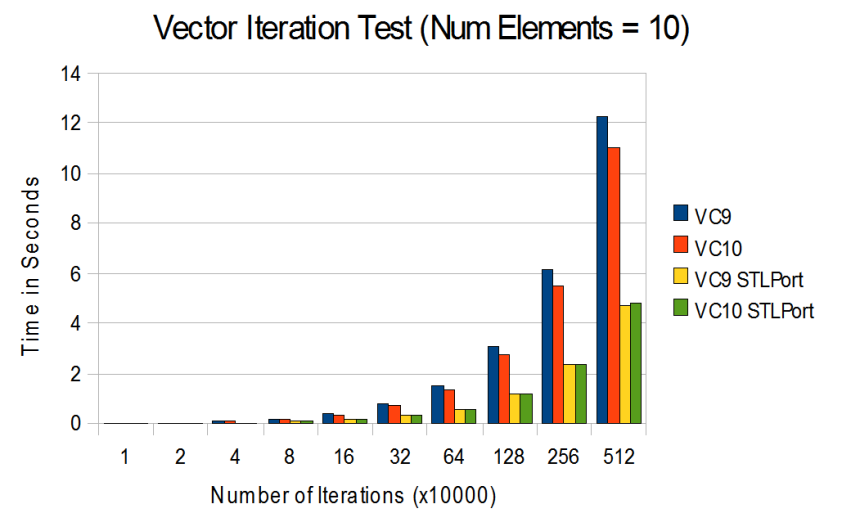

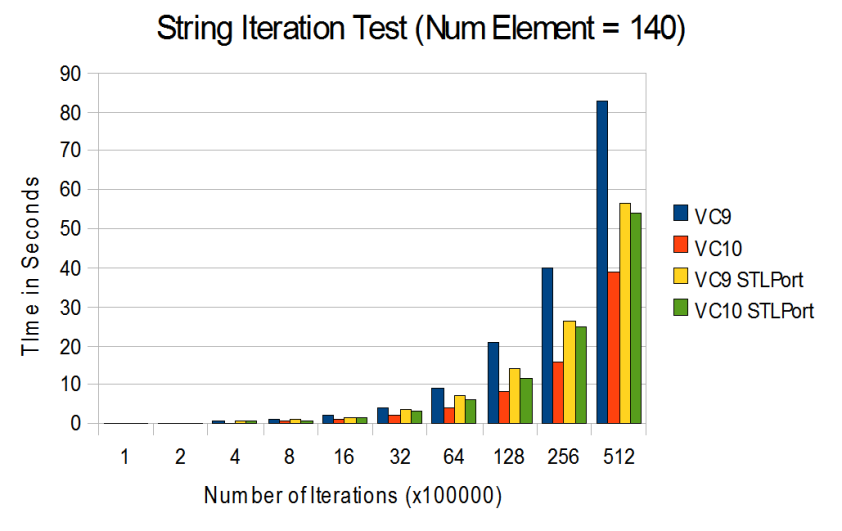

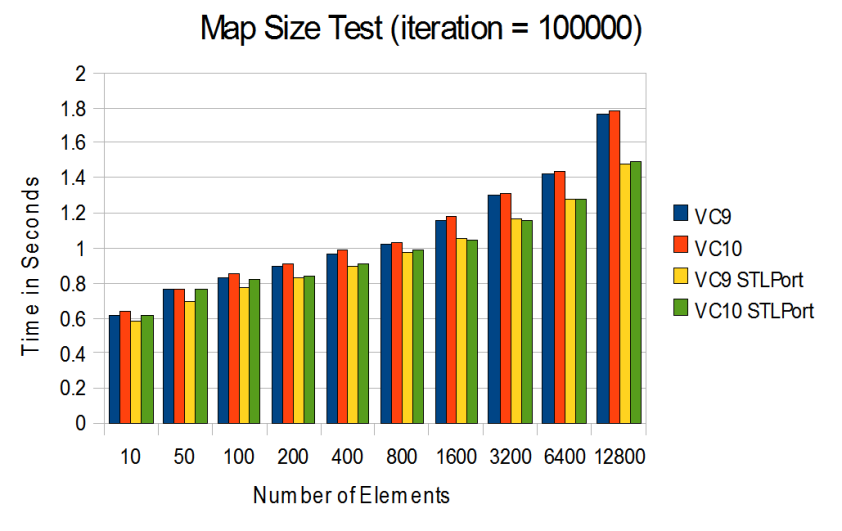

Recall: The stress test I wrote last year benchmarks 1. performance under growing container sizes, and 2. running a large number of operations while keeping the container size constant.
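The two modes can be sketched roughly as below. This is not the actual benchmark code: `fill_vector`, `time_fill`, `run_growing_sizes` and `run_fixed_size` are hypothetical names, the doubling schedule is an assumption, and timing uses `std::clock()` only because it is available on every compiler in the comparison.

```cpp
#include <cassert>
#include <cstddef>
#include <ctime>
#include <vector>

// Hypothetical workload: builds a vector of `size` ints, `repeats` times.
// Returns the number of elements written so the work has a visible result.
std::size_t fill_vector(std::size_t size, std::size_t repeats)
{
    std::size_t written = 0;
    for (std::size_t r = 0; r < repeats; ++r) {
        std::vector<int> v;
        for (std::size_t i = 0; i < size; ++i)
            v.push_back(static_cast<int>(i));
        written += v.size();
    }
    return written;
}

// Times one call to fill_vector with std::clock() and returns CPU seconds.
double time_fill(std::size_t size, std::size_t repeats)
{
    std::clock_t start = std::clock();
    std::size_t written = fill_vector(size, repeats);
    std::clock_t stop = std::clock();
    assert(written == size * repeats);
    return static_cast<double>(stop - start) / CLOCKS_PER_SEC;
}

// Mode 1: the container size doubles from `first` up to `last`, one pass each.
std::vector<double> run_growing_sizes(std::size_t first, std::size_t last)
{
    assert(first > 0); // doubling from zero would never terminate
    std::vector<double> seconds;
    for (std::size_t n = first; n <= last; n *= 2)
        seconds.push_back(time_fill(n, 1));
    return seconds;
}

// Mode 2: the container size stays fixed while the operation count is large.
double run_fixed_size(std::size_t size, std::size_t repeats)
{
    return time_fill(size, repeats);
}
```

Calling `run_growing_sizes(1000, 8000)` would time sizes 1000, 2000, 4000 and 8000, while `run_fixed_size(100, 50)` repeats the same small fill 50 times.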

Recall: The test for vector involves three operations – insertion, iterator traversal, and copy.
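One round of the vector test might look something like this. It is a sketch under assumptions: the element type, the use of `push_back` for insertion, and the name `vector_round` are mine, not taken from the benchmark source.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical single round: insertion, iterator traversal, then copy.
// Returns a checksum (element sum plus copy size) so nothing is optimized away.
long vector_round(std::size_t n)
{
    // Insertion: append n elements at the back.
    std::vector<int> v;
    for (std::size_t i = 0; i < n; ++i)
        v.push_back(static_cast<int>(i));

    // Iterator traversal: walk the whole container once.
    long sum = 0;
    for (std::vector<int>::const_iterator it = v.begin(); it != v.end(); ++it)
        sum += *it;

    // Copy: copy-construct a second vector from the first.
    std::vector<int> copy(v);
    assert(copy.size() == v.size());

    return sum + static_cast<long>(copy.size());
}
```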

Recall: The test for string involves three operations – string copy, substring search, and concatenation.
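The three string operations can be sketched in the same way. The function name `string_round`, its parameters, and the choice of `find` and `+=` are assumptions for illustration; the real test may use different inputs and calls.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical round: copy, substring search, and concatenation, repeated.
// Returns a checksum built from the match position and the final length.
std::size_t string_round(const std::string& text,
                         const std::string& needle,
                         std::size_t repeats)
{
    assert(!needle.empty());
    std::size_t checksum = 0;
    for (std::size_t r = 0; r < repeats; ++r) {
        // String copy.
        std::string copy(text);

        // Substring search.
        std::string::size_type pos = copy.find(needle);
        if (pos != std::string::npos)
            checksum += pos;

        // Concatenation.
        copy += needle;
        checksum += copy.size();
    }
    return checksum;
}
```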

Recall: The test for map involves insertion, search, and deletion.
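A minimal sketch of the map round follows. Sequential integer keys and the name `map_round` are assumptions made to keep the example short; the actual benchmark may well use random keys or a different value type.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <utility>

// Hypothetical round: insert n keys, look each one up, then erase them all.
// Returns the number of successful lookups.
std::size_t map_round(int n)
{
    // Insertion.
    std::map<int, int> m;
    for (int i = 0; i < n; ++i)
        m.insert(std::make_pair(i, i * 2));

    // Search.
    std::size_t hits = 0;
    for (int i = 0; i < n; ++i)
        if (m.find(i) != m.end())
            ++hits;

    // Deletion.
    for (int i = 0; i < n; ++i)
        m.erase(i);
    assert(m.empty());

    return hits;
}
```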

Recall: The test for deque comes with a twist. The deque is used as a priority queue through make_heap(), push_heap() and pop_heap(). Random items are inserted and removed from the queue upon each iteration.
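Here is roughly how a deque can be driven as a priority queue with the heap algorithms. The names `heap_round` and `drains_in_order`, the use of `std::rand()`, and the one-push-one-pop pattern per iteration are assumptions; only the three heap calls come from the description above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <deque>

// Hypothetical round: build a max-heap inside a deque, then push one random
// item and pop the largest item on every iteration.
std::deque<int> heap_round(std::size_t initial, std::size_t iterations,
                           unsigned seed)
{
    std::srand(seed);
    std::deque<int> q;
    for (std::size_t i = 0; i < initial; ++i)
        q.push_back(std::rand());
    std::make_heap(q.begin(), q.end());

    for (std::size_t i = 0; i < iterations; ++i) {
        q.push_back(std::rand());            // new item goes to the back...
        std::push_heap(q.begin(), q.end());  // ...and is sifted into place
        std::pop_heap(q.begin(), q.end());   // largest item moves to the back
        q.pop_back();                        // and is removed
    }
    assert(q.size() == initial);
    return q;
}

// Drains a copy of the heap and checks that items come out largest first.
bool drains_in_order(std::deque<int> q)
{
    bool have_previous = false;
    int previous = 0;
    while (!q.empty()) {
        std::pop_heap(q.begin(), q.end());
        int largest = q.back();
        q.pop_back();
        if (have_previous && largest > previous)
            return false;
        previous = largest;
        have_previous = true;
    }
    return true;
}
```

Each iteration leaves the queue at its original size, which fits the constant-size mode of the stress test.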

Conclusion

The STL implementation in VC10 definitely shows some improvements over its predecessor. It has narrowed the gap with STLPort, but it still has a bit more to go.

On average, STLPort compiled with VC10 is 2.5% faster than STLPort compiled with VC9. So upgrading to VC10 should provide a performance gain even for those who don’t use VC’s own STL.
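For clarity, one way to arrive at an average improvement figure like this is to take the percentage gain per test and average those. This is an assumption about the method, not a description of how the data sheet computes it, and both function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Percentage by which new_seconds improves on old_seconds.
double percent_improvement(double old_seconds, double new_seconds)
{
    assert(old_seconds > 0.0);
    return (old_seconds - new_seconds) / old_seconds * 100.0;
}

// Mean of the per-test percentage improvements (assumed averaging method).
double average_improvement(const std::vector<double>& old_runs,
                           const std::vector<double>& new_runs)
{
    assert(!old_runs.empty() && old_runs.size() == new_runs.size());
    double total = 0.0;
    for (std::size_t i = 0; i < old_runs.size(); ++i)
        total += percent_improvement(old_runs[i], new_runs[i]);
    return total / static_cast<double>(old_runs.size());
}
```

Averaging per-test percentages weights every test equally; comparing total run times instead would let the longest tests dominate.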

I wasn’t disappointed or impressed by the improvements. So I guess it was within my expectations.

Source and data sheet can be downloaded here.

Tools: Visual Studio 2008 (VC9), Visual Studio 2010 (VC10), STLport 5.2.1

Machine Specification: Intel i5-750 with 4GB of RAM. Windows 7.