In multi-threaded programming, the lost-wakeup problem is a subtle problem that causes a thread to miss a wake-up due to race condition.

In the obvious case, the thread that misses a wake-up would sleep forever and no work is performed.

But often, a thread that missed a wake-up could be recovered by a subsequent wake-up. To the outside world, the post-condition looks identical, and the lost-wakeup problem stays under the radar.

This is scary because the worst kind of software bug you can have is the kind that exists, and you know nothing about it, including its existence.

A Simple Locked Queue

Consider a simple thread-safe queue that supports push() and pop().

The function pop() guarantees to return a item. If the queue is empty, it would block and wait on a “not empty” condition until an item exists.

The function push() guarantees that the item is inserted in the front of the queue. It notifies a thread the “not empty” condition when the queue goes from “empty” to “not empty”.

Guess what? This queue suffers from the lost-wakeup problem.

// generic thread-safe queue that supports push() and pop()

template <typename T>

class locked_queue : noncopyable

{

public:

// push an item into the end of the queue

void push(T const &item)

{

mutex::scoped_lock lock(m_mutex);

m_queue.push(item);

// queue went from empty to one, notify a thread

if(m_queue.size() == 1)

{

m_notEmptyCondition.notify_one();

}

}

// pop the oldest item out of the queue

// blocks until there are item to be popped

T pop()

{

mutex::scoped_lock lock(m_mutex);

while(m_queue.empty() == true)

m_notEmptyCondition.wait(lock);

T item = m_queue.front();

m_queue.pop();

return item;

}

private:

queue<T> m_queue;

mutex m_mutex;

condition_variable m_notEmptyCondition;

};

The Test Case

To prove that locked_queue suffers the lost-wakeup problem, we can write a small test case to simulate a race condition.

The test case involves a producer thread, and two consumer threads. Two consumers are up and waiting for items. The producer thread then pushes two items in the queue. If the queue works properly, since two items are pushed into the queue, both consumer thread should wakeup and completes its function.

// producer that pushes two items to a queue

void producer(locked_queue_t *q)

{

q->push(1);

q->push(2);

}

// consumer that pops an item from the queue

void consumer(locked_queue_t *q)

{

int i = q->pop();

std::cout << i << std::endl;

}

int main()

{

locked_queue_t lq;

// set up two consumers to wait for items

thread c1(bind(&consumer, &lq));

thread c2(bind(&consumer, &lq));

// sleep for a second to ensure c1 and c2 are ready

// not the best code, but good enough for a test case

Sleep(1000);

// create the producer to push in two items

thread p(bind(&producer, &lq));

c1.join();

c2.join();

p.join();

return 0;

}

Here’s the output of the test case on my machine.

1 //wait forever and does not exit

Test Case Step-By-Step Replay

Here’s the step-by-step replay on exactly what happened in our test case.

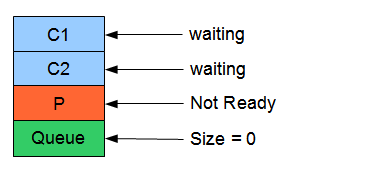

1. First, we create two consumer threads C1 and C2. Since the queue is initially empty, C1 and C2 must block and wait on the “not empty” condition.

Step 1

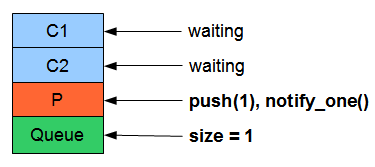

2. Then producer thread P starts. P immediately pushes an item into the queue, and then notifies a thread.

Step 2

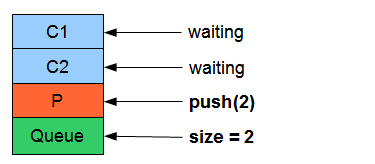

3. This step is the key to the lost-wakeup. After P notifies a thread with the “not empty” condition, it beats the consumer thread and immediately add another item to the queue. Notice that the queue size is now 2, and because the queue was not empty, the “not empty” condition was not fired. At this point, two threads are waiting for data, and only one would be woken up.

Step 3

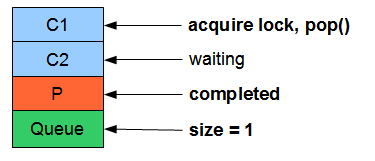

4. At last, C1 was woken up. It acquires the lock, pops the queue, and completes its operation. However, C2 will stay asleep forever.

Step 4

The Solution

The root of the problem is from a premature optimization where the “not empty” condition is only notified when the queue size goes from 0 to 1. We can simply change push() to always call notify_all(), and this problem goes away.

void push(T const &item)

{

mutex::scoped_lock lock(m_mutex);

m_queue.push(item);

// always notify regardless of the queue size

m_notEmptyCondition.notify_all();

}

Final Thoughts

The original locked_queue works perfectly if there is only one producer and one consumer. If there is more than one producer or consumer, lost-wakeup problem exists.

What I’ve demonstrated here is only one variant of the lost-wakeup problem. In practice, there can be far more complex and subtle.

To avoid lost-wakeup, here’s two best practices to follow.

- Its best to always signal all threads that are waiting for a condition. It might appear to be wasteful, but it is a lot better than losing a wake-up.

- A timed wait is an alternative way to solve this problem. This would force a thread to wait up periodically to check for the condition, regardless whether or not it has been signaled.

Source

The source and the data sheet can be downloaded here.

Tools: Visual Studio 2008, Boost 1.41, Window 7, Intel I5-750 (quad core)