After I learned that Compare-And-Swap (CAS) has an infinite consensus number, I felt like I can rule the world with it. Well, maybe not quite, but I felt pretty darn good.

I got the urge to utilize the power of CAS, so I spent some time looking into its usage in the real-world of mutual exclusion.

Just so happens, I bumped into a chapter in the textbook that describes a spin lock algorithm called the Test-And-Test-And-Set (TTAS) lock.

The algorithm is succinct and it is elegantly solved by CAS.

TTASLock

In a mutual exclusion protocol, only one thread can acquire the lock.

If it acquired the lock, it may access the critical region, get its job done, and get out of critical region.

If it can’t acquire the lock, there are two choices. It can repeatedly test the lock – spinning, or it yields its slice of time to another thread and come back later – blocking.

If the contention is low, and spin locks might make sense.

In the test-and-test-and-set algorithm, the mutex is first tested to see if it is free. If it appears to be free, we perform test-and-set (CAS) on mutex.

The two tests idea is similar to the double-checked locking optimization to reduce the CAS overhead.

Here is my implementation of the TTAS lock in C++. It is a scoped lock that utilizes the Win32 Interlocked Variable Access API to perform atomic synchronization.

// atomic read is just a volatile read in C

inline LONG atomicRead(volatile LONG *mem) { return *mem; }

class CTtasLock

{

public:

// mutex class that holds the lock state

class CTtasMutex

{

public:

CTtasMutex() : m_state(0) {};

LONG m_state;

LONG const static Locked = 1;

LONG const static NotLocked = 0;

};

// RAII constructor that acquire lock upon construction

explicit CTtasLock(CTtasMutex&m) : m_mutex(m) { lock(); }

// RAII destructor that performs the "scoped" lock behavior

~CTtasLock() { unlock(); }

// spin lock that performs the test-test-and-set algorithm

void lock()

{

while(true)

{

// test until state is cleared

while(atomicRead(&m_mutex.m_state) == CTtasMutex::Locked){};

// test-and-set

if(InterlockedCompareExchange(

&m_mutex.m_state,

CTtasMutex::Locked,

CTtasMutex::NotLocked) == CTtasMutex::NotLocked)

{

return;

}

}

}

// unlock the mutex with atomic set

void unlock()

{

InterlockedExchange(&m_mutex.m_state, CTtasMutex::NotLocked);

}

private:

CTtasMutex &m_mutex;

};

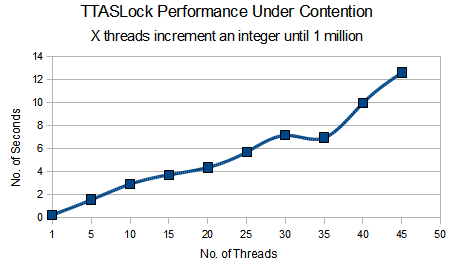

Performance Under Contention

So how well does TTASLock work under contention?

Unfortunately, not very well at all. 😦

Multiple thread race to increment a shared integer value to one million. As the number of thread increases, contention increases, and performance decreases.

As the number of threads increases, there are more contentions and more cycles are wasted spinning. And since the TTASLock spins on a shared mutex, each spinning thread encounters a cache miss whenever a thread acquires the lock and writes to the mutex. Thus, the increase of contention also leads to the increase in cache misses.

And worse yet, the first test in TTAS has almost no benefit in practice because the performance is dominated by cache misses.

[Update: See ttas-spinlock-with-yield for a more scalable solution]

Thoughts and Conclusions

Although TTAS lock has performance issues in practice, I enjoy its simplicity.

The cache misses issue can be relieved if each thread observes a different counter, and ensure that the counter are far enough that they are on different cache lines.

Source

The source and the data sheet can be downloaded here.

Tools: Visual Studio 2008, Boost 1.41, Window 7

Amazing information, your blog rocks!!!

each spinning thread encounters a cache miss whenever a thread acquires the lock and writes to the mutex. Thus, the increase of contention also leads to the increase in cache misses.

You think thats true? In my opinion you dont use any cache, because you always use the volatile read. So you always read back from mainmemory which is the reason your algorithm is slow, isn t it?

Hi M.T.

“each spinning thread encounters a cache miss whenever a thread acquires the lock and writes to the mutex. ”

Yes, I agree.

“Thus, the increase of contention also leads to the increase in cache misses.”

Yes, I agree as well.

“In my opinion you dont use any cache, because you always use the volatile read. So you always read back from mainmemory which is the reason your algorithm is slow, isn t it?”

That’s an interesting question.

First of all, I can’t use any cache because the data is not protected. I need to ensure visibility of m_mutex.m_state for all thread. If data is cached, very bad things can happen.

Next, I am not sure if it is the “main” reason why the algorithm is slow. I think it is a combination of what you said, and the number of times this is being done.

When the mutex flag is being polled, it burns up CPU cycle. I believe I tested this on a quad core processor. If there are more than 4 threads running concurrently, some threads are going to lose and have to wait in line.

Have you seen my experiment with “TTAS with Yield”? Just a simple backoff algorithm results in excellent performance.

Thanks for the feedback.

… Alan

Note that spinlocks in general are only useful when you have limited contention _and_ critical sections are short. If these are respected, TTAS works great as it is!

Paolo,

Yes, I agree. In my example, the contention is extremely high. In a real world perspective, it definitely misuses TTAS.

Thanks for the feedback.

… Alan

if it’s interesting for you , you can read this presentation too: http://www.cs.tau.ac.il/~afek/Spin%20Locks%20and%20Contention.ppt